Vince Power

Vince is a guest blogger for Twistlock who has a focus on cloud adoption and technology implementations using open source-based technologies. He has extensive experience with core computing and networking (IaaS), identity and access management (IAM), application platforms (PaaS) and continuous delivery.

Serverless platforms can help make workloads more secure. Because they let teams deploy small components that each perform a specific function and can be chained together into full-featured services, serverless gives security-minded developers a useful new tool.

In this way, serverless abstracts yet another layer away from the infrastructure, so DevOps teams no longer have to worry about keeping the compiler and runtime for their language up to date and patched against known security vulnerabilities. That’s great from a security standpoint.

You could sum up the security advantages of serverless this way: what containers did to let the operating system and hardware layer be maintained and secured separately, serverless does for the application and web server layer.

And yet, serverless is no silver bullet from a security perspective. Serverless environments still need to be designed and managed with security in mind at every step.

Toward that end, this article discusses the typical security risks associated with serverless computing and tips for addressing them.

Serverless Still Needs Input Validation

As with any application built and deployed, serverless applications process and transfer data. That data can arrive as part of the event that kicks off processing or can be retrieved from a data source as part of the application’s functionality.

Proper input validation does more than make sure area codes are properly formatted; it also stops some of the most common types of attacks that applications face. In serverless applications, the most common type of injection attack is SQL injection (SQLi).

In a SQL injection attack, the attacker inserts SQL code into a request with the goal of either returning too much data or destroying data.
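To see why unvalidated input is dangerous, here is a minimal sketch of the underlying mechanism. The `buildOrdersQuery` function is hypothetical and deliberately unsafe: it pastes the input straight into the SQL string, so crafted input can change the query’s logic rather than just the value it compares against.

```javascript
// Hypothetical, deliberately vulnerable query builder: never build SQL like this.
function buildOrdersQuery(phone) {
  return "SELECT * FROM orders WHERE phone = '" + phone + "'";
}

// Intended input produces the intended query.
console.log(buildOrdersQuery("5065551212"));
// SELECT * FROM orders WHERE phone = '5065551212'

// Crafted input closes the string literal and appends a condition that is
// always true, so the WHERE clause now matches every row in the table.
console.log(buildOrdersQuery("' OR '1'='1"));
// SELECT * FROM orders WHERE phone = '' OR '1'='1'
```

The same trick can append destructive statements instead of an always-true condition, which is how injection ends up destroying data rather than leaking it.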

As a practical example of input validation, suppose your function expects a phone number to be passed in as part of the payload and returns all orders for that phone number.

Here is how a SQLi attack can play out against that function. First, the normal case:

```javascript
// Input JSON:
// { "phonenumber": "5065551212" }

// Relevant code: returns the orders that match the phone number
var sql = "SELECT * FROM orders WHERE phone LIKE " + connection.escape(phonenumber);

connection.query(sql, function (error, results, fields) {
  callback(error, results);
});
```

Now the caller sends a wildcard instead of a phone number, and the function returns way too much data:

```javascript
// Input JSON now contains %, the SQL LIKE wildcard that matches everything:
// { "phonenumber": "%" }

// Relevant code is exactly the same but now returns all orders.
// connection.escape() quotes the value and escapes quote characters,
// but it does not escape LIKE wildcards such as % and _.
var sql = "SELECT * FROM orders WHERE phone LIKE " + connection.escape(phonenumber);

connection.query(sql, function (error, results, fields) {
  callback(error, results);
});
```

With input validation added (here using the awesome-phonenumber library rather than a hand-written regex), the wildcard is rejected before any query runs:

```javascript
// Input JSON still contains the wildcard:
// { "phonenumber": "%" }

// Relevant code with an additional library that validates phone numbers.
// This is the constructor-style API of older awesome-phonenumber releases;
// newer releases expose parsePhoneNumber() instead.
var PhoneNumber = require('awesome-phonenumber');

var pn = new PhoneNumber(phonenumber, 'US');

if (pn.isValid()) {
  var sql = "SELECT * FROM orders WHERE phone LIKE " + connection.escape(phonenumber);

  connection.query(sql, function (error, results, fields) {
    callback(error, results);
  });
} else {
  // "%" is not a valid phone number, so this branch runs and no query is made
  callback(null, "ERROR - invalid phone number");
}
```
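If you would rather not add a dependency, a regular expression can handle the same check for simple cases. This is a minimal sketch assuming North American (NANP) numbers supplied as exactly ten digits with no punctuation; `isValidUsPhone` and `buildOrdersLookup` are hypothetical helpers. It also returns a `?` placeholder query, assuming a driver that supports placeholders (the `mysql` package for Node.js does), so the value is never concatenated into the SQL text.

```javascript
// Ten digits; under NANP rules the area code and the exchange
// (first and fourth digits) cannot start with 0 or 1.
const US_PHONE = /^[2-9]\d{2}[2-9]\d{6}$/;

// Hypothetical helper: accepts only strings such as "5065551212".
function isValidUsPhone(input) {
  return typeof input === "string" && US_PHONE.test(input);
}

// Hypothetical helper: validate first, then hand the value to the driver
// as a parameter instead of splicing it into the SQL string.
function buildOrdersLookup(phonenumber) {
  if (!isValidUsPhone(phonenumber)) {
    return { error: "ERROR - invalid phone number" };
  }
  return { sql: "SELECT * FROM orders WHERE phone = ?", params: [phonenumber] };
}

console.log(buildOrdersLookup("5065551212"));
console.log(buildOrdersLookup("%"));
```

With a driver like `mysql`, the result would be passed as `connection.query(lookup.sql, lookup.params, callback)`. A strict allow-list pattern is deliberately narrow: it rejects formats such as `(506) 555-1212`, so normalize input first if you need to accept them.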

More types of injection attacks are detailed in an article published by Acunetix.

Secrets Still Need to Be Secret

When using application development components like API gateways and cloud services like SQS on AWS, authentication and authorization increasingly rely on keys and certificates. Because these aren’t traditional usernames and passwords, they are often overlooked and checked into source repositories. You can avoid this problem by keeping them in the vaults that the different cloud platforms provide and presenting them to the individual functions as environment variables.

If you want to know whether you have checked any secrets into your code, tools like TruffleHog can search your Git history, including repositories hosted on GitHub.
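To illustrate the idea behind such tools, here is a greatly simplified, pattern-based sketch. It is not how TruffleHog itself is implemented: real scanners ship many more detectors, add entropy checks, and walk every commit in the history rather than a single string. The `findSecrets` function and its detector list are hypothetical.

```javascript
// Hypothetical detectors; each pattern uses the g flag so matchAll() finds every hit.
const DETECTORS = [
  // Long-term AWS access key IDs start with "AKIA" followed by 16 uppercase letters or digits.
  { name: "AWS access key ID", pattern: /\bAKIA[0-9A-Z]{16}\b/g },
  // PEM-encoded private keys begin with a recognizable header line.
  { name: "Private key", pattern: /-----BEGIN (?:RSA |EC |OPENSSH )?PRIVATE KEY-----/g },
];

// Return every suspected secret in a piece of text, with its type and position.
function findSecrets(text) {
  const findings = [];
  for (const { name, pattern } of DETECTORS) {
    for (const match of text.matchAll(pattern)) {
      findings.push({ type: name, match: match[0], index: match.index });
    }
  }
  return findings;
}

// AKIAIOSFODNN7EXAMPLE is the placeholder key ID used in AWS documentation.
console.log(findSecrets('const key = "AKIAIOSFODNN7EXAMPLE";'));
```

Running a check like this (or the real tool) as a pre-commit hook catches secrets before they ever reach the repository, which is far easier than scrubbing them from history afterward.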

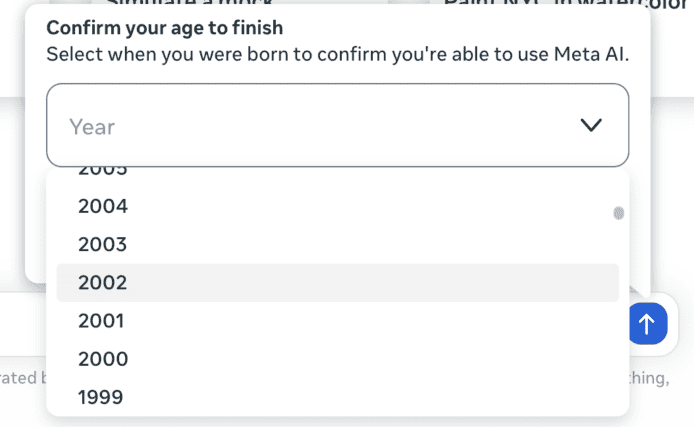

In AWS Lambda, environment variables are set in the function’s Environment variables section. Azure Functions and Google Cloud Functions offer similar configuration.

For example, in Python you read the environment variable by importing the os module, then use it like any other variable:

```python
import os

secret_api_token = os.environ['SECRET_API_TOKEN']
```

In JavaScript (Node.js), you use the process.env object:

```javascript
const secret_api_token = process.env.SECRET_API_TOKEN;
```
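Reading `process.env` directly yields `undefined` when a variable was never configured, and that can silently turn into requests sent with no credentials. A minimal sketch of a fail-fast alternative follows; `requireEnv` is a hypothetical helper, not a Node.js built-in.

```javascript
// Hypothetical helper: read a required secret from the environment and throw
// immediately if it is missing or empty, instead of returning undefined.
function requireEnv(name) {
  const value = process.env[name];
  if (value === undefined || value === "") {
    // The error names the variable but never includes any secret value.
    throw new Error(`Missing required environment variable: ${name}`);
  }
  return value;
}
```

In a function module you would call `const secret_api_token = requireEnv("SECRET_API_TOKEN");` at the top level, outside the handler, so a misconfigured function fails as soon as it initializes rather than partway through a request. Avoid logging the returned value.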

Too Much Access (And Too Little Access Control)

There are two distinct risks associated with access control in a serverless environment. The first centers on how much access clients have to the serverless app; the second, on how much access the serverless app has to the systems it interacts with.

Regarding the risk of clients having too much access: because a serverless application is a single function, it is tempting to put only the minimum criteria in place to make it work. In reality, the function needs to distinguish between the clients that call it and filter data so that each requestor can interact only with the data relevant to them, whether by automatically appending a WHERE clause to an SQL query or by including the requestor’s information on messages sent downstream. Otherwise, if someone figures out they can ask for more data than is returned by default, the resulting data leak can become a large public relations problem down the road.
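Here is a minimal sketch of both techniques. It assumes an API Gateway-style event in which an authorizer has already verified the caller and placed their ID at `event.requestContext.authorizer.customerId`; that field name, the `customer_id` column, and all three functions are hypothetical, and where the verified identity actually appears depends on your authorizer and API type. The key point is that the requestor ID comes from the verified context, never from the request body.

```javascript
// Hypothetical: read the caller's identity from the authorizer context only.
function getRequestorId(event) {
  const id = event?.requestContext?.authorizer?.customerId;
  if (!id) {
    throw new Error("Unauthenticated request");
  }
  return String(id);
}

// Always append the requestor filter, so a caller can only ever see their own orders.
function buildScopedOrdersQuery(event, phonenumber) {
  const customerId = getRequestorId(event);
  return {
    sql: "SELECT * FROM orders WHERE customer_id = ? AND phone = ?",
    params: [customerId, phonenumber],
  };
}

// Messages sent downstream carry the requestor so consumers can apply the same filter.
function buildOrderMessage(event, order) {
  return JSON.stringify({ requestorId: getRequestorId(event), order });
}

const exampleEvent = { requestContext: { authorizer: { customerId: "c-123" } } };
console.log(buildScopedOrdersQuery(exampleEvent, "5065551212"));
```

Because the filter is added in one place rather than by each caller, there is no request a client can craft that drops it.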

The second access control risk occurs when developers take the easy way out and grant the application they are working on more access than it needs to the other functions and services it depends on. This practice is common in development tiers, where the goal is simply to make things work, but with today’s continuous integration and continuous delivery (CI/CD) pipelines, it is far too easy for excess permissions to propagate up to production systems. For example, in development the application might be granted full access to the data store when it only needs the ability to select and insert. The extra ability to update or delete may seem harmless until the day the wrong script is run against the wrong environment and you’ve lost precious customer data (like orders).
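One way to keep excess permissions from riding the pipeline into production is an automated check at each promotion. This is a minimal sketch assuming you can list the permissions actually granted to a function and the set it is known to need; `findExcessPermissions` and both lists are hypothetical.

```javascript
// Hypothetical CI check: list every permission granted beyond what the function needs.
// Comparison is case-insensitive, so "select" and "SELECT" count as the same grant.
function findExcessPermissions(granted, required) {
  const needed = new Set(required.map((p) => p.toUpperCase()));
  return granted.map((p) => p.toUpperCase()).filter((p) => !needed.has(p));
}

// The scenario from the text: full access granted, but only SELECT and INSERT needed.
const grantedInDev = ["SELECT", "INSERT", "UPDATE", "DELETE"];
const neededByFunction = ["SELECT", "INSERT"];

const excess = findExcessPermissions(grantedInDev, neededByFunction);
if (excess.length > 0) {
  // A real pipeline would fail the build here instead of just logging.
  console.log(`Excess permissions: ${excess.join(", ")}`);
}
// prints "Excess permissions: UPDATE, DELETE"
```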

Going forward, the best practice is to grant only the required access, starting at the lowest development tiers, and to give each serverless function its own separate user, which makes traceability and data integrity much easier to guarantee.
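As a sketch of what one user per function can look like for a SQL data store, the snippet below generates one MySQL-style GRANT statement per function from a trusted configuration map. The function names, the `_user` naming convention, and the `shop.orders` table are hypothetical; because the statements are built by string interpolation, the map must come from your own configuration, never from request input.

```javascript
// Hypothetical configuration: each function gets only the privileges it needs.
const FUNCTION_PRIVILEGES = {
  getOrders: ["SELECT"],
  createOrder: ["INSERT"],
};

// Generate one GRANT per function so every function has its own database user.
// '%' allows connections from any host; restrict it where your network allows.
function buildGrantStatements(privilegesByFunction, table) {
  return Object.entries(privilegesByFunction).map(
    ([fn, privileges]) => `GRANT ${privileges.join(", ")} ON ${table} TO '${fn}_user'@'%';`
  );
}

for (const statement of buildGrantStatements(FUNCTION_PRIVILEGES, "shop.orders")) {
  console.log(statement);
}
```

With a separate user per function, the database’s own audit trail shows which function issued each statement, and a compromised or buggy `getOrders` cannot modify data at all.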

Third-Party Libraries A